Since we don't have one yet...

All I can offer is reviews of what they showed; there are no actual comparison tests yet.

RX 7900 XTX - USD $999 - https://www.amd.com/en/products/graphics/amd-radeon-rx-7900xtx

RX 7900 XT - USD $899 (may be reduced) - https://www.amd.com/en/products/graphics/amd-radeon-rx-7900xt

The XT has only 85% of the performance of the XTX.
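To put the prices and that 85% figure side by side, here is a rough sketch of the value math. It assumes performance can be boiled down to one relative number (XTX = 1.00, XT = 0.85), which is a simplification, and the names `cards` and `cost_per_perf` are just made up for this example.

```python
# Prices and the 85% figure come from the post above. Treating performance
# as a single relative number is a simplifying assumption.
cards = {
    "RX 7900 XTX": {"price_usd": 999, "relative_perf": 1.00},
    "RX 7900 XT": {"price_usd": 899, "relative_perf": 0.85},
}


def cost_per_perf(price_usd, relative_perf):
    """Dollars paid per unit of XTX-equivalent performance."""
    return price_usd / relative_perf


results = {name: cost_per_perf(**spec) for name, spec in cards.items()}

for name, cost in results.items():
    print(f"{name}: ${cost:.0f} per XTX-equivalent of performance")
```

On those numbers the XT is about 90% of the price for 85% of the performance, so at launch pricing the XTX looks like the slightly better value unless the XT price drops.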

All the videos show the same things, so there is no point showing more.

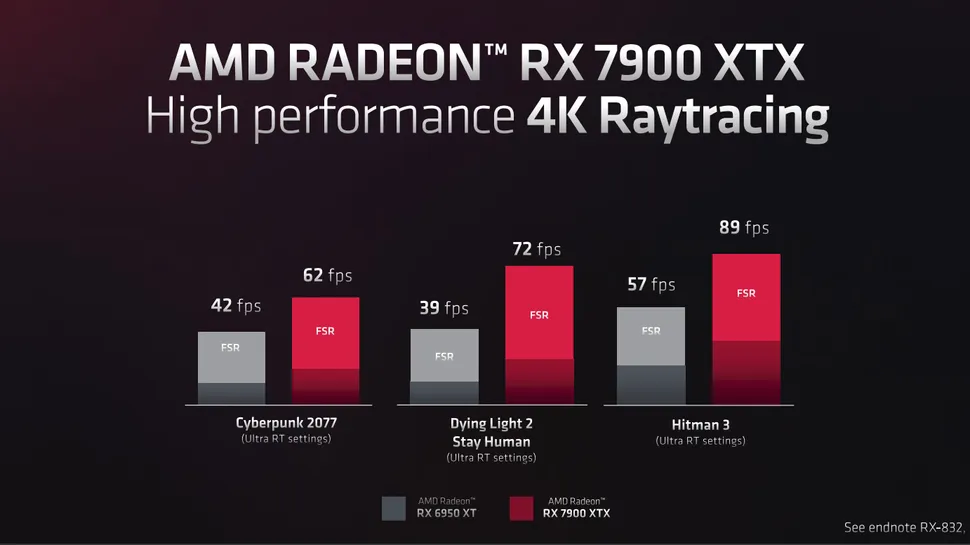

It seems some people expected AMD to catch Nvidia in RT. I never assumed that, since they are one generation behind. The XTX might make the 4080 look bad on price.

I was waiting for a 7800 XT, assuming power draw would be the same or higher this year. Instead, the 7900 XT has the same power draw as the 6800 XT... so I am now looking at it.
